Running Fastai book Lesson 1 on Colab

The fastai course is complemented by a Jupyter-based fastai book, and I tried out lesson 1 of the book on Colab.

The Colab links for each chapter of the book are provided in the project README under the Colab section. This is the “recommended approach” for working with the fastbook.

Use the T4 GPU Hardware accelerator

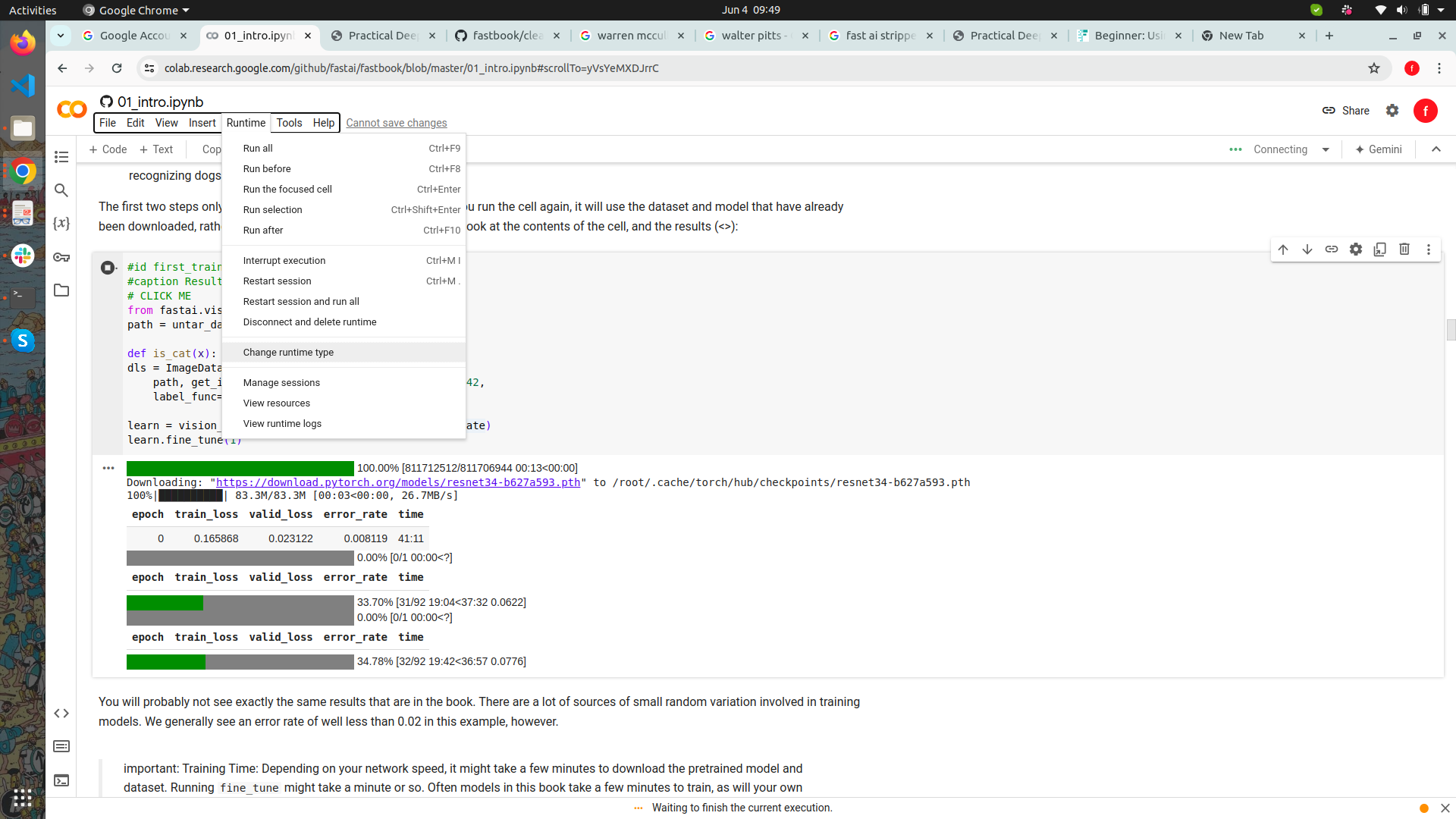

The lesson starts with training and fine-tuning a model. My first problem going through the lesson was the speed of execution. In the screenshot below, the training had already taken about 40 minutes.

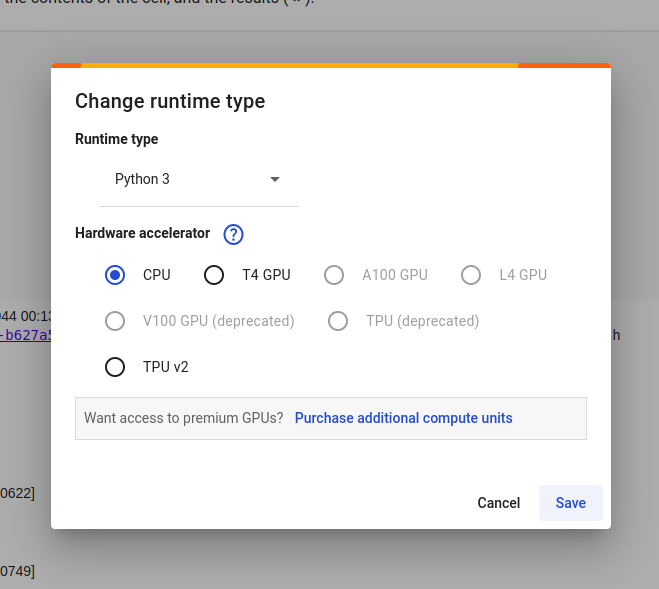

Going through the issues, I found my answer: the hardware accelerator. The setting is found in the runtime type settings (Runtime > Change runtime type).

As suspected, my runtime type was set to Python 3 and the hardware accelerator was set to CPU.

Change the setting to T4 GPU.
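A quick way to confirm that the notebook actually sees the GPU after the change is to ask PyTorch, which fastai runs on. This is a minimal sketch: the helper name `describe_accelerator` is my own, not something from the lesson, and it falls back to a plain message if PyTorch is not installed.

```python
def describe_accelerator() -> str:
    """Return a short description of the accelerator PyTorch can see."""
    try:
        import torch  # preinstalled on Colab; may be missing elsewhere
    except ImportError:
        return "PyTorch is not installed, so no accelerator check was run"
    if torch.cuda.is_available():
        # Index 0 is the first visible GPU (assumed to be the only one here).
        return f"GPU available: {torch.cuda.get_device_name(0)}"
    return "No GPU visible: training will run on the CPU"


print(describe_accelerator())
```

If this still reports the CPU, the runtime type change has probably not been saved, or the runtime has not restarted yet.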

With this setting changed, execution is much faster and the cell finishes in a few minutes.

Cat vs Dog

The part of the lesson I found interesting was the use of a pretrained convolutional neural network (CNN) to create a cat and dog classifier. The classifier is created by downloading resnet34, a CNN with 34 layers that has already been trained on 1.3 million images, and fine-tuning it to tell cats from dogs using the Oxford-IIIT Pet Dataset, which contains images of 37 different breeds of cats and dogs.

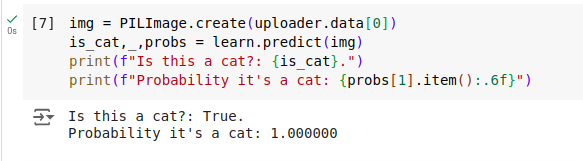
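The training step described above can be sketched roughly as follows. This is my condensed reading of the lesson code, not a verbatim copy: the wrapper function `train_cat_classifier` and the two example file names are assumptions for illustration, and older fastai releases name the learner factory `cnn_learner` rather than `vision_learner`.

```python
def is_cat(filename: str) -> bool:
    """Label rule from the lesson: cat breeds have capitalized file names."""
    return filename[0].isupper()


def train_cat_classifier(epochs: int = 1):
    """Fine-tune a pretrained resnet34 to separate cats from dogs."""
    # Imported here so the labeling rule above can be used without fastai.
    import fastai.vision.all as fv

    # Download and unpack the Oxford-IIIT Pet Dataset images.
    path = fv.untar_data(fv.URLs.PETS) / "images"
    dls = fv.ImageDataLoaders.from_name_func(
        path,
        fv.get_image_files(path),
        valid_pct=0.2,  # hold out 20% of the images for validation
        seed=42,
        label_func=is_cat,
        item_tfms=fv.Resize(224),  # resize every image to 224x224
    )
    learn = fv.vision_learner(dls, fv.resnet34, metrics=fv.error_rate)
    learn.fine_tune(epochs)
    return learn


# The labeling rule can be checked without downloading anything
# (both file names are made-up examples in the dataset's naming style):
print(is_cat("Birman_12.jpg"), is_cat("beagle_7.jpg"))
# In a Colab cell with a GPU runtime: learn = train_cat_classifier()
```

Keeping the fastai import inside the function means the labeling rule can be tried on its own, while the download and training only happen when the function is called.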

The resulting model can be tested by downloading a random cat picture from the internet:

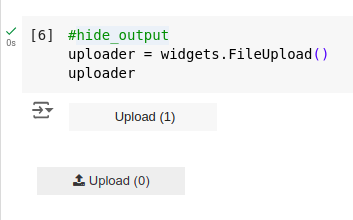

The downloaded image is then uploaded using the uploader widget.

The tuned model was able to detect my random cat:

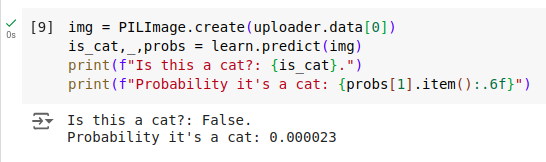

Next, I downloaded a random dog image and uploaded it the same way.

The tuned model was able to detect my random dog as well.

Some theoretical sections

The next sections in the book go through the following theoretical questions:

- What is machine learning?

Machine learning is the training of programs developed by allowing a computer to learn from its experience, rather than by manually coding the individual steps.

- What is a neural network?

Neural networks are special because they are highly flexible, which means they can solve an unusually wide range of problems just by finding the right weights. This is powerful, because stochastic gradient descent provides us a way to find those weight values automatically.

- Deep learning jargon

The functional form of the model is called its architecture (but be careful—sometimes people use model as a synonym of architecture, so this can get confusing).

The weights are called parameters.

The predictions are calculated from the independent variable, which is the data not including the labels.

The results of the model are called predictions.

The measure of performance is called the loss.

The loss depends not only on the predictions, but also the correct labels (also known as targets or the dependent variable); e.g., “dog” or “cat.”
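To make the jargon concrete, here is a toy sketch in plain Python, not taken from the book. It uses a straight line as the architecture, two parameters `w` and `b`, squared error as the loss, and stochastic gradient descent to find the parameters. The data, learning rate and epoch count are assumptions chosen so the example converges quickly.

```python
import random


def predict(x: float, w: float, b: float) -> float:
    """Architecture: the functional form, here a straight line."""
    return w * x + b


def squared_error(prediction: float, target: float) -> float:
    """Loss: compares a prediction with the correct label (target)."""
    return (prediction - target) ** 2


def fit_sgd(xs, ys, lr=0.1, epochs=200, seed=0):
    """Find the parameters w and b with stochastic gradient descent."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0  # parameters (weights), starting from an arbitrary guess
    order = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(order)  # visit the examples in a new random order
        for i in order:
            error = predict(xs[i], w, b) - ys[i]
            # Gradient of the squared error with respect to w and b.
            w -= lr * 2 * error * xs[i]
            b -= lr * 2 * error
    return w, b


xs = [i / 4 for i in range(-4, 5)]  # independent variable
ys = [3 * x + 2 for x in xs]        # labels (targets) from a known line
w, b = fit_sgd(xs, ys)
mean_loss = sum(squared_error(predict(x, w, b), y) for x, y in zip(xs, ys)) / len(xs)
print(f"w={w:.3f}, b={b:.3f}, mean loss={mean_loss:.6f}")
```

A real neural network swaps the straight line for a far more flexible function with many more parameters, but the loop has the same shape: predict, measure the loss against the labels, and nudge the parameters to reduce it.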